Cognitive Biases and AI

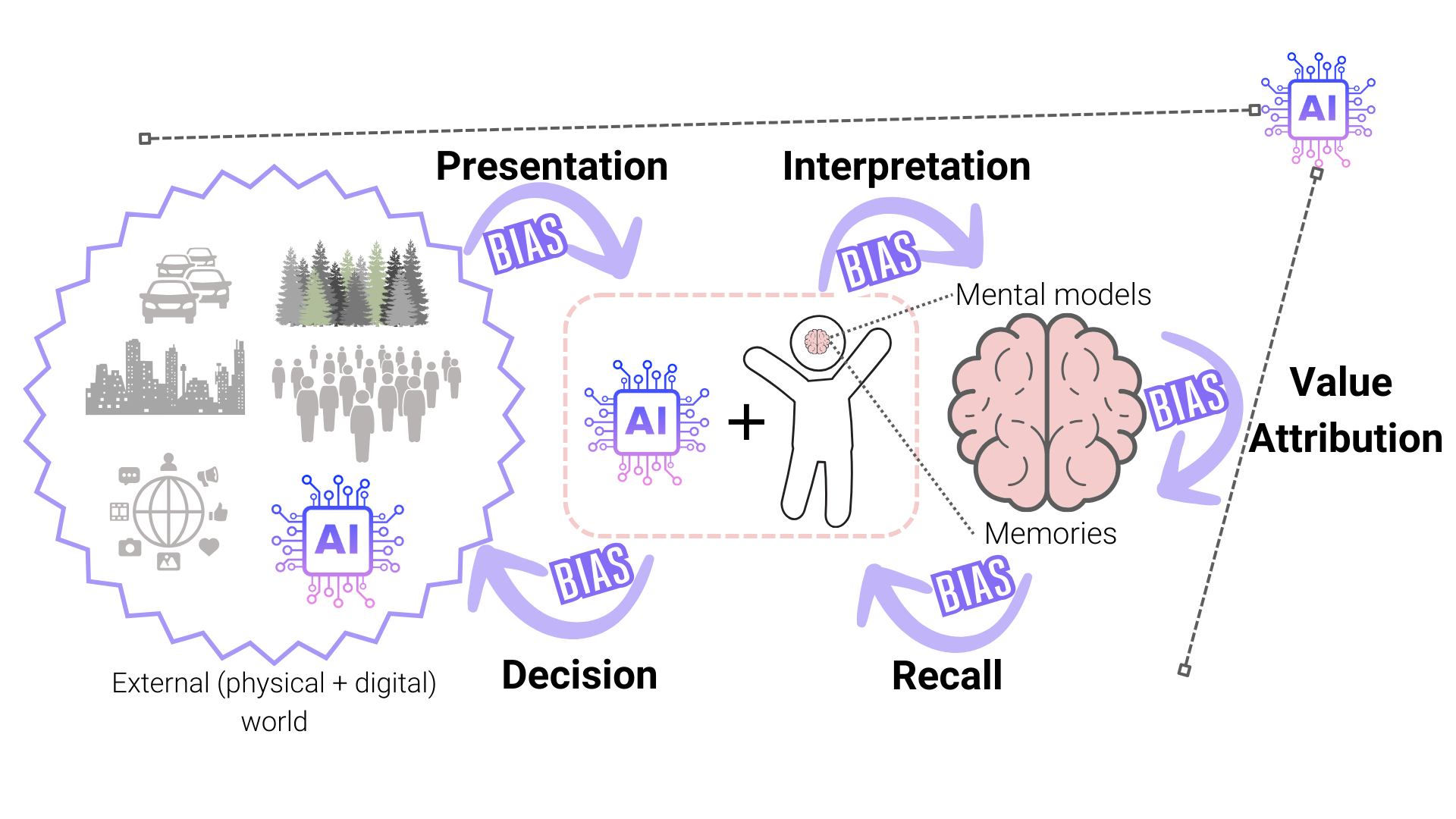

Human perception, memory and decision-making are shaped by dozens of cognitive biases and heuristics. Despite their pervasiveness, these biases are generally not leveraged by today's Artificial Intelligence (AI) systems that model human behavior and interact with humans. Our work aims to help bridge this gap.

We propose that the future of human-machine collaboration will entail the development of AI systems that model, understand and possibly replicate human cognitive biases and heuristics. Instead of viewing these biases and heuristics as flaws in our decision-making abilities, our work treats them as systematic patterns of deviation that can, and should, be accounted for by AI systems that work with humans.

Incorporating knowledge of these biases into the design of AI systems is non-trivial for several reasons, one of which is the large number of biases that have been studied across multiple domains. To make the problem more tractable, we proposed a taxonomy of cognitive biases and heuristics that is centered on the perspective of AI systems. A visual representation of this taxonomy is shown in the accompanying figure.

Research Directions

We also outlined research directions for this area. These fall into three broad categories:

Area 1. Human-AI Interaction

Given the extensive interaction between humans and AI systems, it is important to understand how human cognitive biases affect human-AI interaction. We focus on four primary research questions in this area:

- RQ1: Do interactions with AI systems exhibit the same biases as those observed in human-to-human interactions?

- RQ2: What is the interplay between trust and AI-based systems that account for cognitive biases?

- RQ3: Are AI-based systems that incorporate cognitive biases easier to understand and interpret?

- RQ4: Are AI systems that account for these cognitive biases better cognitive aids than AI systems that do not consider cognitive biases?

Area 2. Cognitive Biases in AI Systems

Given that humans have used these biases and heuristics successfully for a very long time, we ask whether they could also be beneficial for AI systems. In particular, we focus on the following question in this area:

- RQ5: Could AI algorithms leverage human cognitive biases to learn robustly and efficiently from small datasets?

Area 3. Computational Modeling of Cognitive Biases

A key element of answering these questions is building models of these cognitive biases for computational systems. We propose the following research questions in this area:

- RQ6: What AI approaches are suitable to automatically identify cognitive biases from observed human behavior?

- RQ7: How should AI systems support humans in mitigating their biases?

The Beauty Survey

We are currently addressing some of these questions using the attractiveness halo effect, i.e., the cognitive bias that makes humans associate positive attributes with people who are perceived as attractive. For this work, we are running a large-scale user study (that takes participants 15 minutes to complete and is anonymous!) called the Beauty Survey.

Please help us out by answering the survey and sending it to as many people as possible :)

Link to the survey: Coming soon!

Our work in the media

The Guardian: Photo filters: why are women who use them judged so harshly?

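Der Spiegel: Wer schöner ist, wirkt schlauer (Those who are more beautiful appear smarter)

LuzDeMelilla: Los científicos revelan el error fotográfico común que podría hacer que la gente piense que eres estúpido (Scientists reveal the common photo mistake that could make people think you are stupid)

NTV: Sosyal medya filtreleri kişiliğimizle oynuyor: Değişen sadece güzellik değil (Social media filters are playing with our personality: it is not only beauty that changes)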
