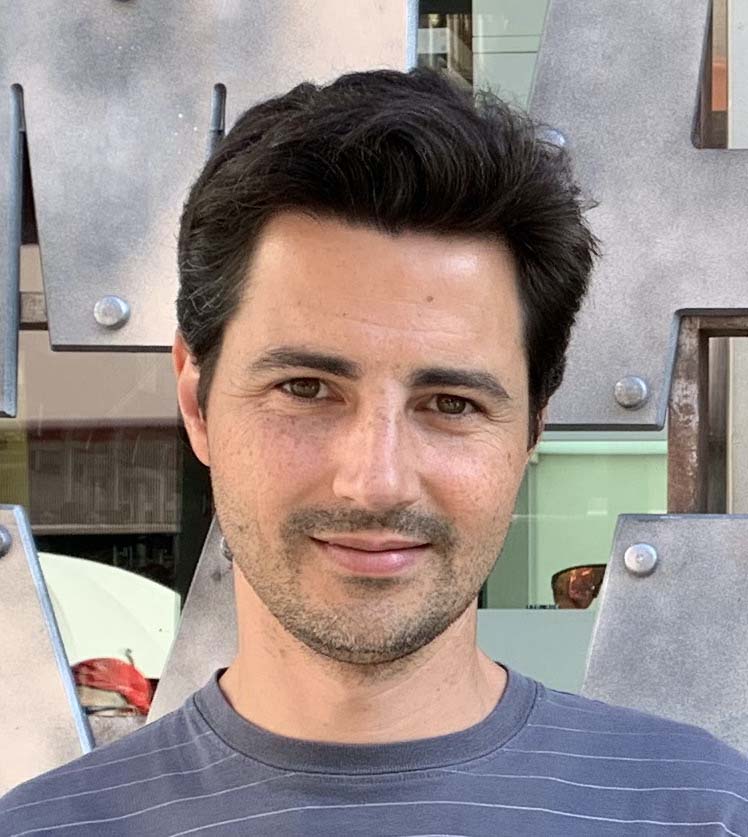

Miguel Angel Lozano

Associate Scientist

Miguel Angel Lozano holds a Degree (2001) and a PhD (2008) in Computer Engineering from the University of Alicante. In 2002 he obtained an FPI research grant, and since 2004 he has been a lecturer at the Department of Computer Science and Artificial Intelligence of the University of Alicante. He has conducted research stays at the Computer Vision & Pattern Recognition Lab of the University of York and at the Bioinformatics Lab of the University of Helsinki. From 2002 to 2010, Miguel Ángel Lozano Ortega conducted his research within the Robot Vision Group (RVG), and in 2010 he joined the Mobile Vision Research Lab (MVRLab). He is currently the head of MVRLab, a group that focuses on pattern recognition and computer vision on mobile devices; the director of the Master’s Degree in Software Development for Mobile Devices; and the Coordinator for Quality Assurance and Educational Innovation at the Polytechnic School. His research interests include pattern recognition, graph matching and clustering, and computer vision. In 2008 he defended his PhD thesis, entitled “Kernelized Graph Matching and Clustering”. Within MVRLab, and in collaboration with Neosistec, several applications to assist blind people have been developed: Aerial Obstacle Detection (AOD), SuperVision, and NaviLens. AOD and NaviLens won the “Application Mobile for Good” award at the 7th and 11th Vodafone Foundation Awards (2014 and 2017, respectively).

In 2020, he joined the Data Science against COVID19 taskforce of the Valencian Government. This team took part in the XPRIZE Pandemic Response Challenge and won first prize. He is also tutoring two ELLIS PhD students at ELLIS Alicante.

Publications in association with ELLIS Alicante

2025

Dubrovnik, HR