A Sociotechnical Approach to Trustworthy AI

TL;DR: Artificial Intelligence now mediates how we see, buy, connect, and decide.

From decisions in high-stakes domains (healthcare, employment, justice) to recommendations in social networks, AI increasingly determines social outcomes.

But with this power come risks: bias, opacity, and misalignment with human values.

This project, based on Adrian Arnaiz’s doctoral thesis "A Sociotechnical Approach to Trustworthy AI: From Algorithms to Regulation", investigates how to make AI technically robust, socially aligned, and legally compliant.

What Is Trustworthy AI?

AI can generate algorithmic harms that affect individuals, groups, and institutions:

- Performance & allocation harms — unequal accuracy or opportunity distribution.

- Stereotype, denigration & representation harms — biased associations or visibility gaps.

- Procedural harms — opaque, unappealable automated decisions.

- Social-network harms — polarization, radicalization, exposure imbalance.

- Interaction harms — over-reliance in human–AI teams leading to degraded outcomes.

- Regulatory harms — misalignment between technical realities and legal requirements.

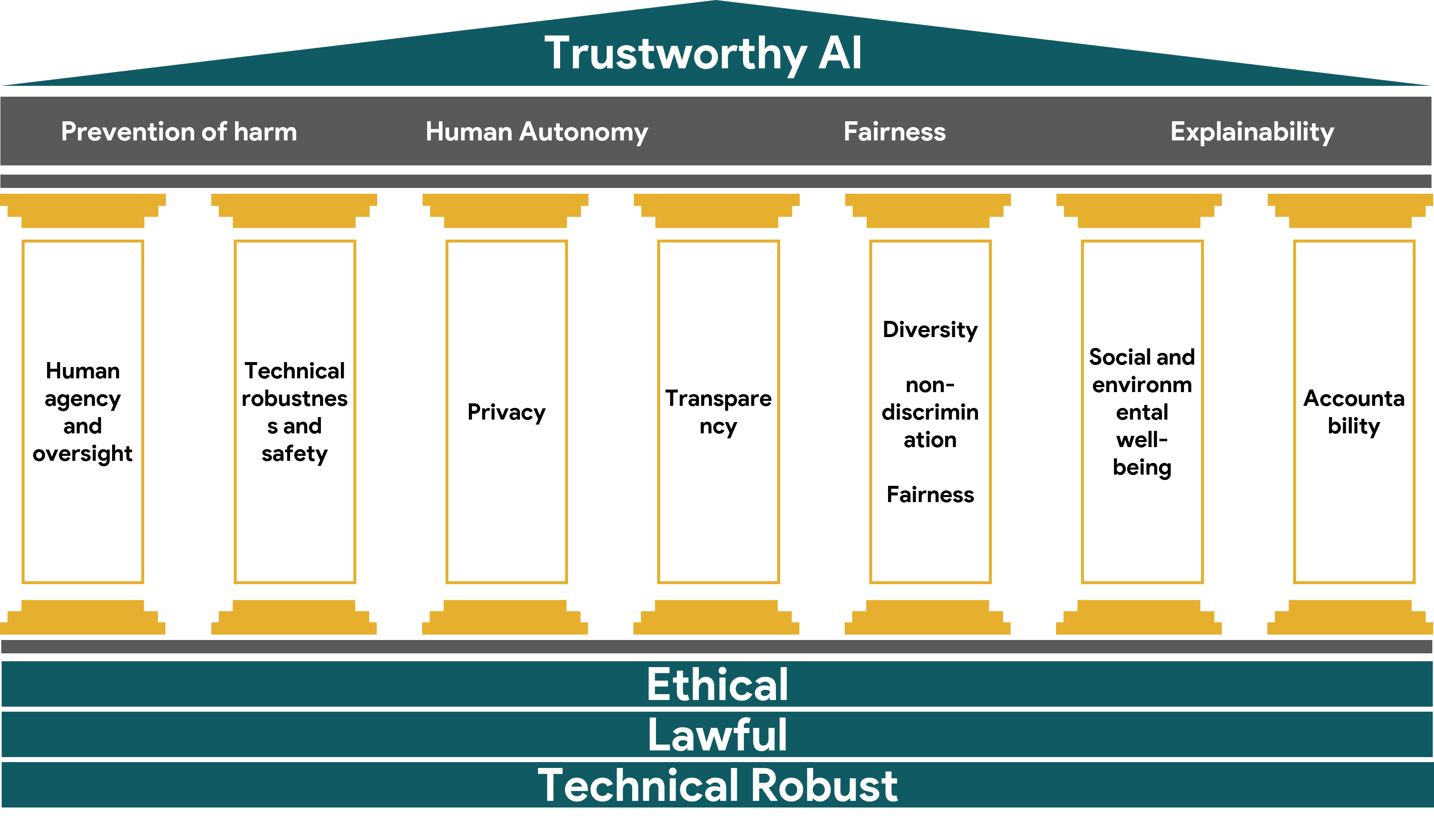

Therefore, many companies, academic institutions, and governments advocate for Trustworthy AI (TAI) as a guiding framework to mitigate these risks. The European notion of TAI was articulated by the EU High-Level Expert Group (HLEG) on AI (2019) and is now embedded in regulations like the Digital Services Act (2022) and the AI Act (2024).

TAI rests on three components — lawful, ethical, robust — grounded in four ethical principles (autonomy, prevention of harm, fairness, explicability) and implemented via seven requirements: human oversight; technical robustness and safety; privacy and data governance; transparency; diversity and non-discrimination; societal and environmental well-being; accountability.

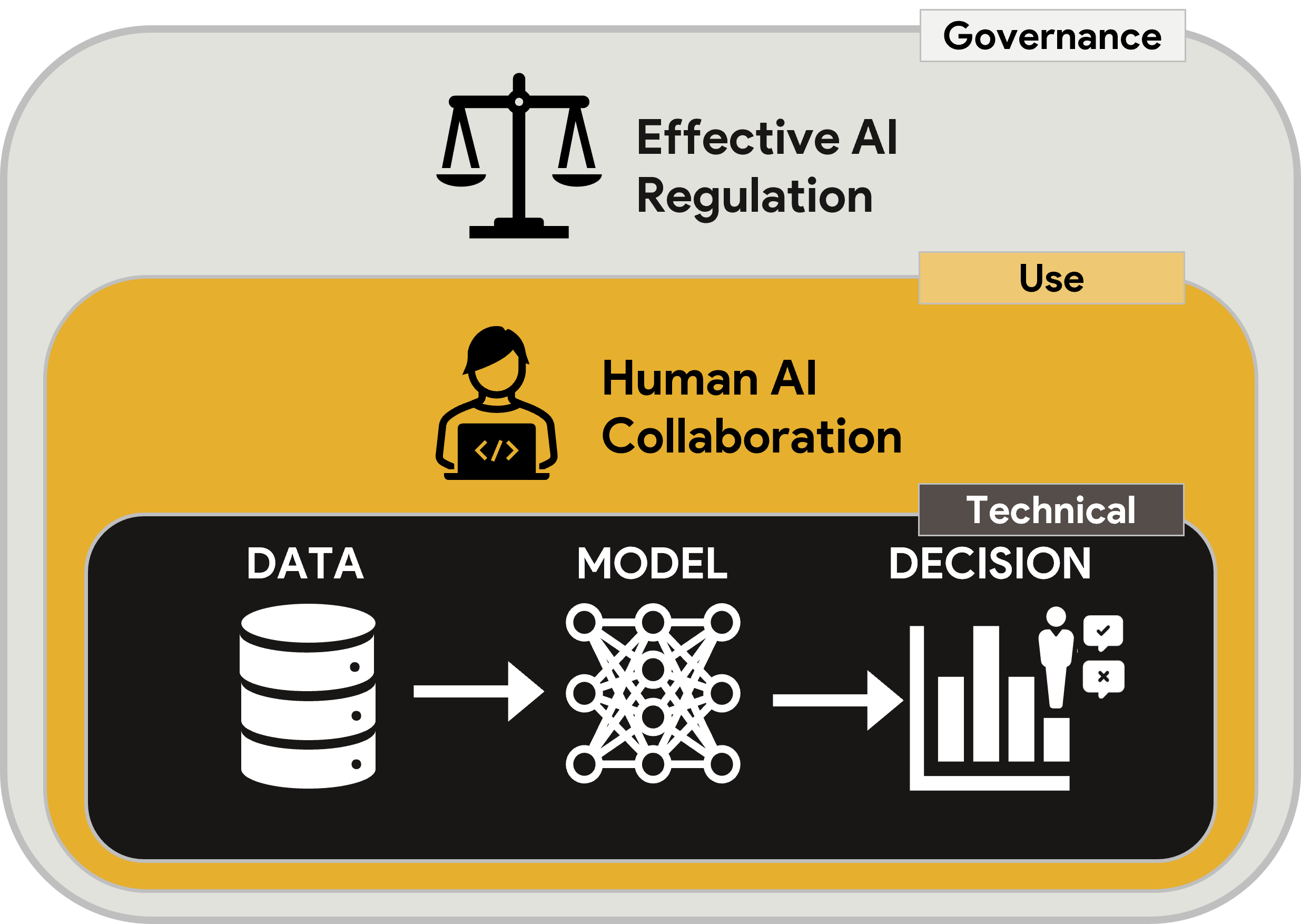

AI-related harms do not arise from algorithms alone but from the interaction between technical design, human behavior, and institutional context. Ensuring Trustworthy AI is therefore a sociotechnical problem: it requires simultaneous progress in the design of algorithms and data, the way humans use and oversee them, and the governance structures that regulate their deployment. The following sections examine each of these three spheres—technical, use, and governance—to show how their integration enables the effective implementation of Trustworthy AI.

1. Technical Sphere: Data and Structural Fairness

Fairness definitions. There are multiple definitions of fairness in machine learning, each capturing a distinct notion of equity depending on the task and context. Broadly, they can be grouped into group fairness and individual fairness metrics. Group fairness measures disparities in the expected outcomes or effects of algorithms across socially defined groups, while individual fairness requires treating similar individuals similarly. We focus on examples of group fairness, as these definitions align more closely with the societal notions of fairness and non-discrimination embedded in current legal frameworks and AI regulations.

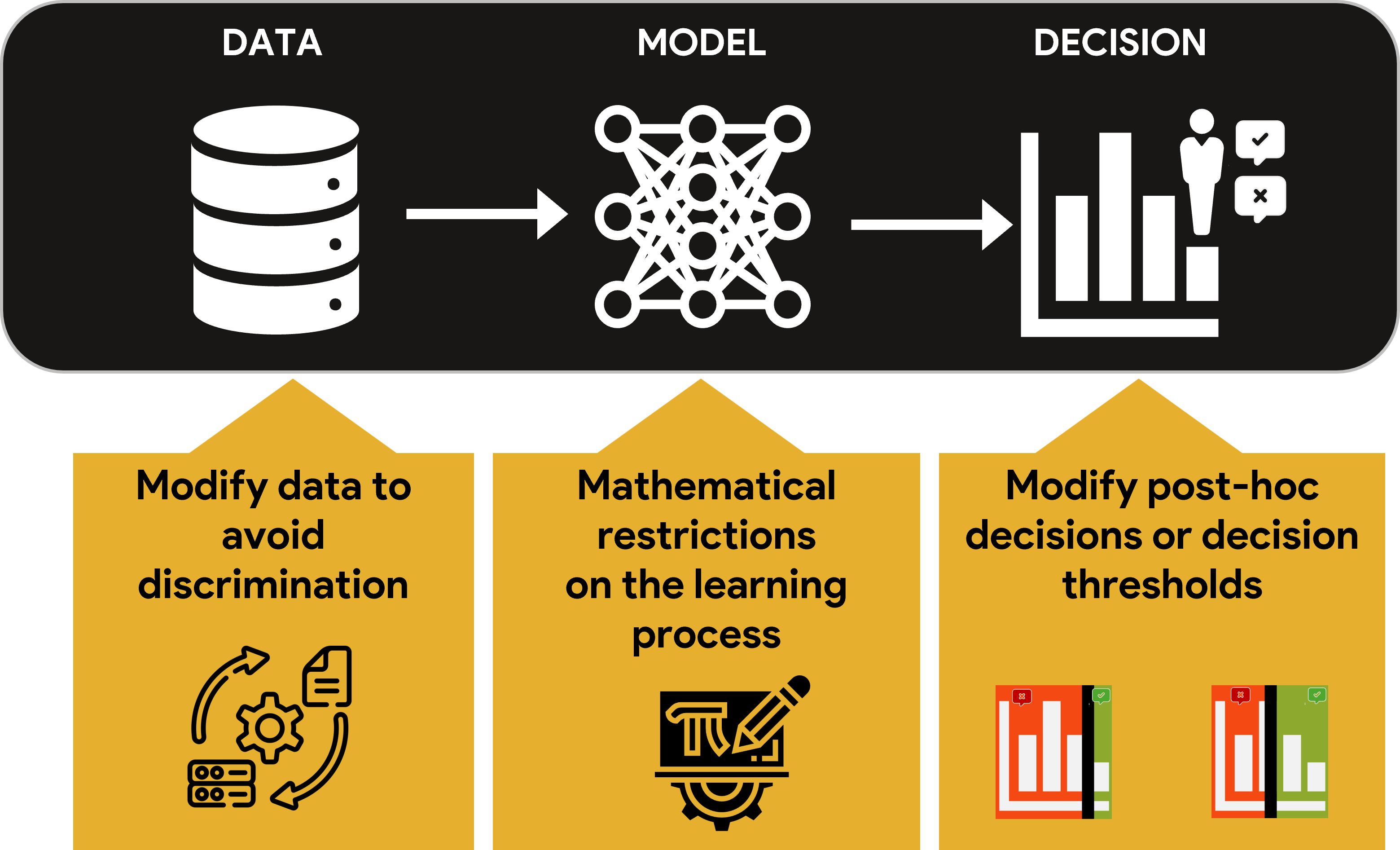

Mitigation levels.

In practice, bias can be reduced or compensated for at different stages of the model pipeline:

- Pre-processing: modifying or reweighting data distributions to reduce pre-existing biases before training.

- In-processing: incorporating fairness constraints or regularization terms directly in the model optimization.

- Post-processing: adjusting decision thresholds or outcomes after prediction to balance disparities across groups.
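As an illustration of the pre-processing level, the sketch below implements reweighing (Kamiran and Calders), one well-known scheme for this stage; it is not a method from this project. Each example receives the weight P(group) · P(label) / P(group, label), so that group and label become statistically independent under the weighted distribution. The toy `groups` and `labels` values and the helper names are assumptions made for the example.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]

def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels inside one group."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(
        w for g, y, w in zip(groups, labels, weights) if g == group and y == 1
    )
    return positive / total

# Hypothetical toy data: group "a" has 3 of 4 positives, group "b" has 1 of 4.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)

rate_a = weighted_positive_rate(groups, labels, weights, "a")
rate_b = weighted_positive_rate(groups, labels, weights, "b")
print(rate_a, rate_b)  # the two weighted base rates coincide after reweighing
```

The weights can then be passed to any learner that accepts per-sample weights, which leaves the model class and the data points themselves unchanged.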

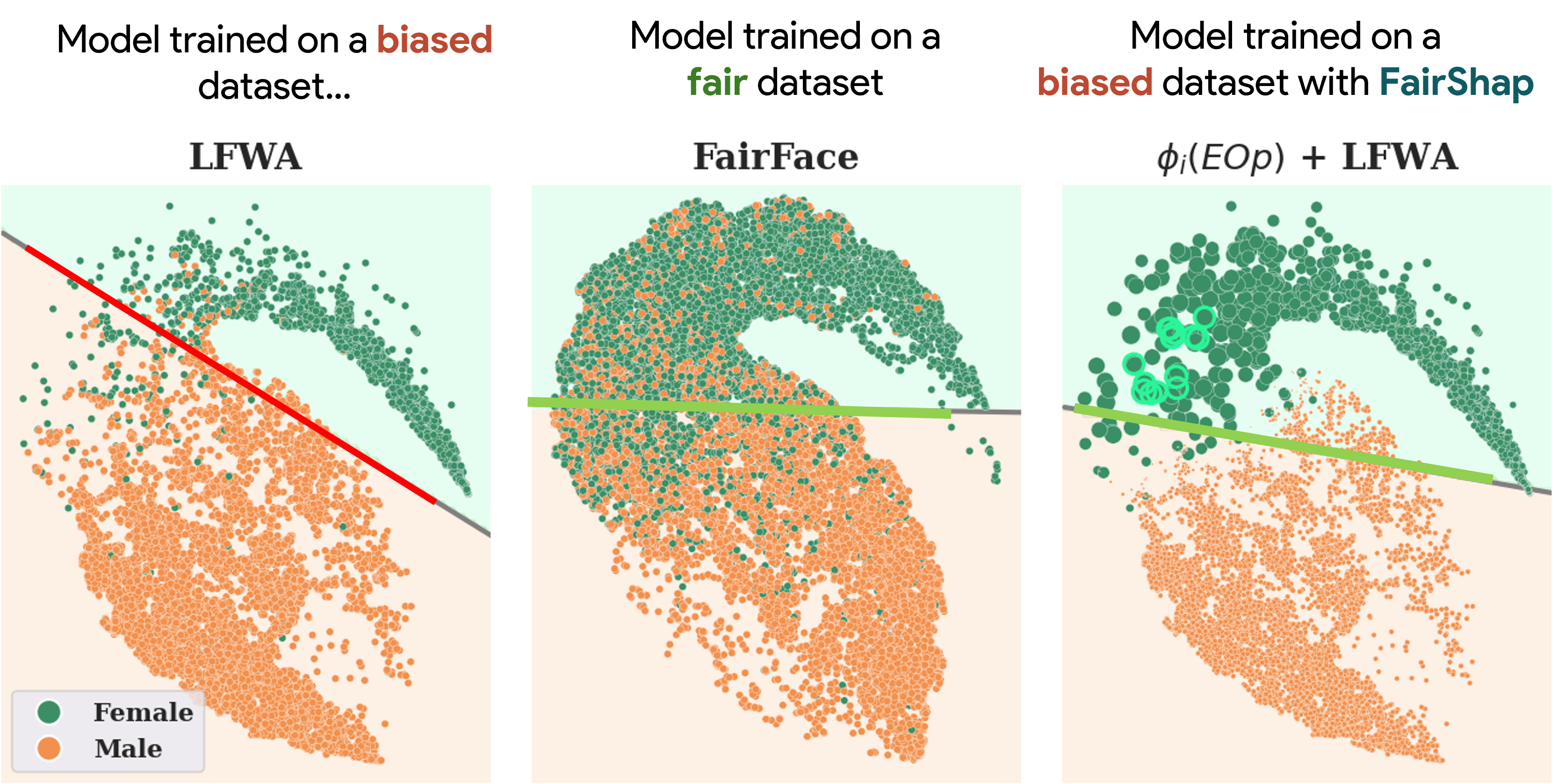

FairShap — Fair Data Valuation for Algorithmic Fairness (DMLR workshop @ ICLR 2024)

- Challenge. In high-risk decision-making, we must prevent discrimination while preserving interpretability and robustness.

- Key question. How much does each data point contribute to the model’s fairness?

- What it does. Quantifies how each training example contributes to a group fairness metric, enabling auditing, re-weighting, and data minimization (pruning) with minimal utility loss.

While most fairness metrics quantify group disparities, they do not explain where those disparities come from. FairShap introduces a data valuation approach that quantifies how each training example contributes to a model’s group fairness metric. It provides interpretable diagnostics and actionable insights for auditing, re-weighting, and data pruning, enabling practitioners to identify which samples disproportionately drive unfair outcomes and to adjust them accordingly.
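To make the idea of valuing data points by their effect on fairness concrete, here is a minimal sketch that uses a leave-one-out stand-in: the value of a training point is the change in the equal-opportunity (TPR) gap when that point is removed. This is a deliberately simplified illustration and not the FairShap algorithm; the 1-nearest-neighbour classifier, the one-dimensional features, and all data below are assumptions chosen so the example stays small.

```python
def predict_1nn(train, x):
    """Label of the training point nearest to x (1-nearest neighbour)."""
    return min(train, key=lambda point: abs(point[0] - x))[1]

def tpr_gap(train, test):
    """Absolute difference in true positive rate between groups 'a' and 'b'."""
    tprs = {}
    for group in ("a", "b"):
        positives = [x for x, y, g in test if g == group and y == 1]
        hits = sum(predict_1nn(train, x) for x in positives)
        tprs[group] = hits / len(positives)
    return abs(tprs["a"] - tprs["b"])

def loo_fairness_values(train, test):
    """Value of point i = gap without i minus gap with every point.

    Positive value: removing the point widens the gap (the point helps).
    Negative value: removing the point narrows the gap (the point hurts).
    """
    base = tpr_gap(train, test)
    return [
        tpr_gap(train[:i] + train[i + 1:], test) - base
        for i in range(len(train))
    ]

# Hypothetical data: training points are (feature, label),
# test points are (feature, label, group).
train = [(0.0, 0), (1.0, 1), (2.0, 1), (3.0, 0), (4.0, 1)]
test = [(1.1, 1, "a"), (1.9, 1, "a"), (2.9, 1, "b"), (4.1, 1, "b")]

values = loo_fairness_values(train, test)
for point, value in zip(train, values):
    print(point, value)
```

In this toy setting the point `(3.0, 0)` receives a negative value because it causes a group "b" positive to be misclassified, which makes it a natural candidate for down-weighting or pruning in an audit.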

To evaluate fairness in decision-making contexts, we focus on canonical group-based metrics: Statistical Parity (SP, also known as Demographic Parity), Equal Opportunity (EO), and Equalized Odds (EOds), which are widely adopted in regulatory frameworks, ethical guidelines, and standards.

- Statistical Parity (SP): equal acceptance rate across protected groups.

- Equal Opportunity (EO): equal true positive rate (TPR).

- Equalized Odds (EOds): equal TPR and FPR.
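The three definitions above can be computed directly from predictions, as in the following minimal sketch. It reports each metric as an absolute gap between two groups; summarizing Equalized Odds as the larger of the TPR and FPR gaps is one common convention and an assumption here, as are the function names and the toy data.

```python
def rate(values):
    """Mean of a list of 0/1 values (NaN when the list is empty)."""
    return sum(values) / len(values) if values else float("nan")

def group_rates(y_true, y_pred, groups, group):
    """Acceptance rate, TPR, and FPR for one protected group."""
    idx = [i for i, g in enumerate(groups) if g == group]
    acceptance = rate([y_pred[i] for i in idx])
    tpr = rate([y_pred[i] for i in idx if y_true[i] == 1])
    fpr = rate([y_pred[i] for i in idx if y_true[i] == 0])
    return acceptance, tpr, fpr

def fairness_gaps(y_true, y_pred, groups, group_a, group_b):
    """Absolute between-group gaps for SP, EO, and EOds."""
    acc_a, tpr_a, fpr_a = group_rates(y_true, y_pred, groups, group_a)
    acc_b, tpr_b, fpr_b = group_rates(y_true, y_pred, groups, group_b)
    return {
        "statistical_parity": abs(acc_a - acc_b),
        "equal_opportunity": abs(tpr_a - tpr_b),
        "equalized_odds": max(abs(tpr_a - tpr_b), abs(fpr_a - fpr_b)),
    }

# Hypothetical labels and predictions for two groups of four people each.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

gaps = fairness_gaps(y_true, y_pred, groups, "a", "b")
print(gaps)
```

A gap of zero means the corresponding criterion is satisfied exactly on this sample; in practice a tolerance is chosen per application.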

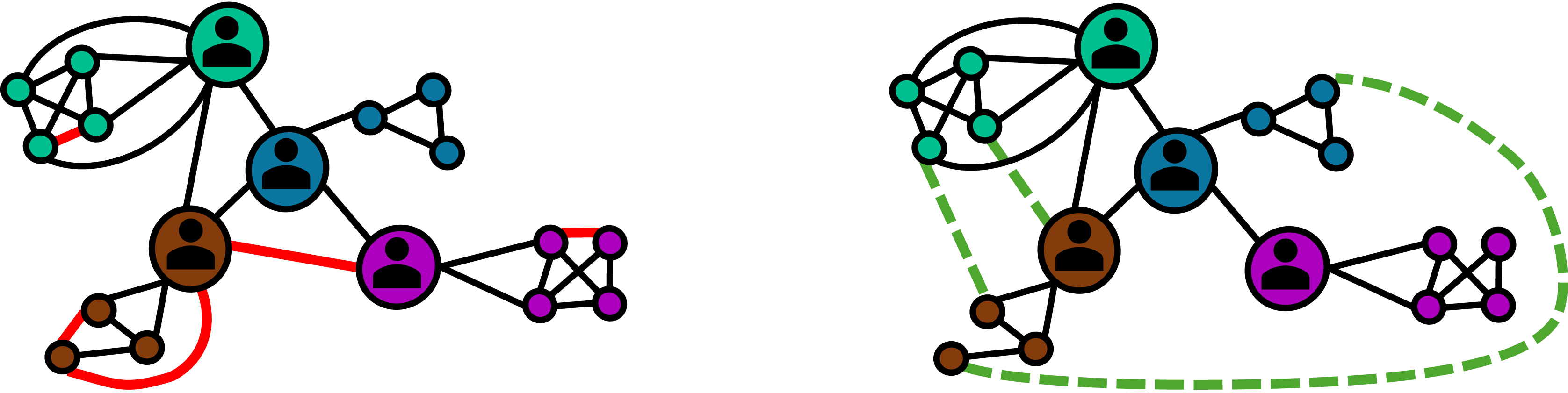

Structural Group Unfairness (SGU) — Fairness in Networks (ICWSM 2025)

- Challenge. In social networks, inequality can emerge from the structure of connections itself, creating disparities in visibility and influence.

- Key question. How can we measure and mitigate structural bias in network connectivity and information diffusion?

- What it does. Uses effective resistance to quantify group isolation, diameter, and control disparities across communities, and introduces ERG, an edge-augmentation strategy that adds weak ties to reduce polarization, connect minorities, and equalize information access.

Algorithmic fairness in social networks introduces new challenges, as inequalities can emerge not only from model parameters but from the structure of the network itself. Structural Group Unfairness (SGU) quantifies disparities in social capital and information access using tools from spectral graph theory, particularly effective resistance, to capture structural imbalances in connectivity.

It defines measurable notions of group isolation, network diameter, and information control, revealing when certain communities are systematically disadvantaged in visibility or reach.

To mitigate such disparities, we propose ERG, an edge-augmentation strategy that introduces “weak ties” — new, low-weight connections that bridge segregated groups — thereby improving both fairness and overall diffusion efficiency.
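The role of effective resistance can be illustrated with a small, dependency-free sketch. It computes the effective resistance between two nodes by grounding one node and solving the reduced Laplacian system, then performs a single greedy step that adds a low-weight edge between the most "distant" cross-group pair. This greedy step is only an illustration of edge augmentation and is not the ERG algorithm; the graph, the group assignment, and the weak-tie weight of 0.5 are assumptions.

```python
def laplacian(n, edges):
    """Weighted graph Laplacian as a dense list of lists."""
    L = [[0.0] * n for _ in range(n)]
    for u, v, w in edges:
        L[u][u] += w
        L[v][v] += w
        L[u][v] -= w
        L[v][u] -= w
    return L

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - tail) / M[i][i]
    return x

def effective_resistance(n, edges, u, v):
    """Ground node v and inject unit current at u; the potential at u is R_uv.

    Assumes the graph is connected.
    """
    if u == v:
        return 0.0
    L = laplacian(n, edges)
    keep = [i for i in range(n) if i != v]
    A = [[L[i][j] for j in keep] for i in keep]
    b = [1.0 if i == u else 0.0 for i in keep]
    return solve(A, b)[keep.index(u)]

def mean_intergroup_resistance(n, edges, group_a, group_b):
    """Average effective resistance over all cross-group node pairs."""
    pairs = [(u, v) for u in group_a for v in group_b]
    return sum(effective_resistance(n, edges, u, v) for u, v in pairs) / len(pairs)

def add_weak_tie(n, edges, group_a, group_b, weight=0.5):
    """Greedy step: link the non-adjacent cross-group pair with the largest R_uv."""
    existing = {frozenset((u, v)) for u, v, _ in edges}
    candidates = [
        (u, v) for u in group_a for v in group_b
        if frozenset((u, v)) not in existing
    ]
    u, v = max(candidates, key=lambda p: effective_resistance(n, edges, *p))
    return edges + [(u, v, weight)]

# Hypothetical network: two triangles (one per group) joined by a single bridge.
edges = [(0, 1, 1.0), (1, 2, 1.0), (0, 2, 1.0),
         (3, 4, 1.0), (4, 5, 1.0), (3, 5, 1.0),
         (2, 3, 1.0)]
group_a, group_b = [0, 1, 2], [3, 4, 5]

before = mean_intergroup_resistance(6, edges, group_a, group_b)
augmented = add_weak_tie(6, edges, group_a, group_b)
after = mean_intergroup_resistance(6, augmented, group_a, group_b)
print(before, after)  # the cross-group average drops after adding the weak tie
```

Because adding an edge can never increase the effective resistance between any pair of nodes, even a single weak tie lowers the cross-group average in this example.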

This work builds on a broader line of research on the theoretical foundations and challenges of Graph Neural Networks (GNNs), including phenomena such as over-smoothing and over-squashing. Works featured in LoG 2022 and MLG @ ECML-PKDD 2025 (Best Paper Award), together with tutorials at LoG 2022 and ICML 2024 (with Ameya Velingker, >1000 in-person attendees), provide the graph-rewiring and information-flow foundations that make structural fairness interventions and long-range reasoning in GNNs possible.

2. Use Sphere: Human–AI Collaboration

Human–AI teams can outperform humans or algorithms alone — or do worse due to over-reliance or mistrust. We design collaboration to be complementary by construction.

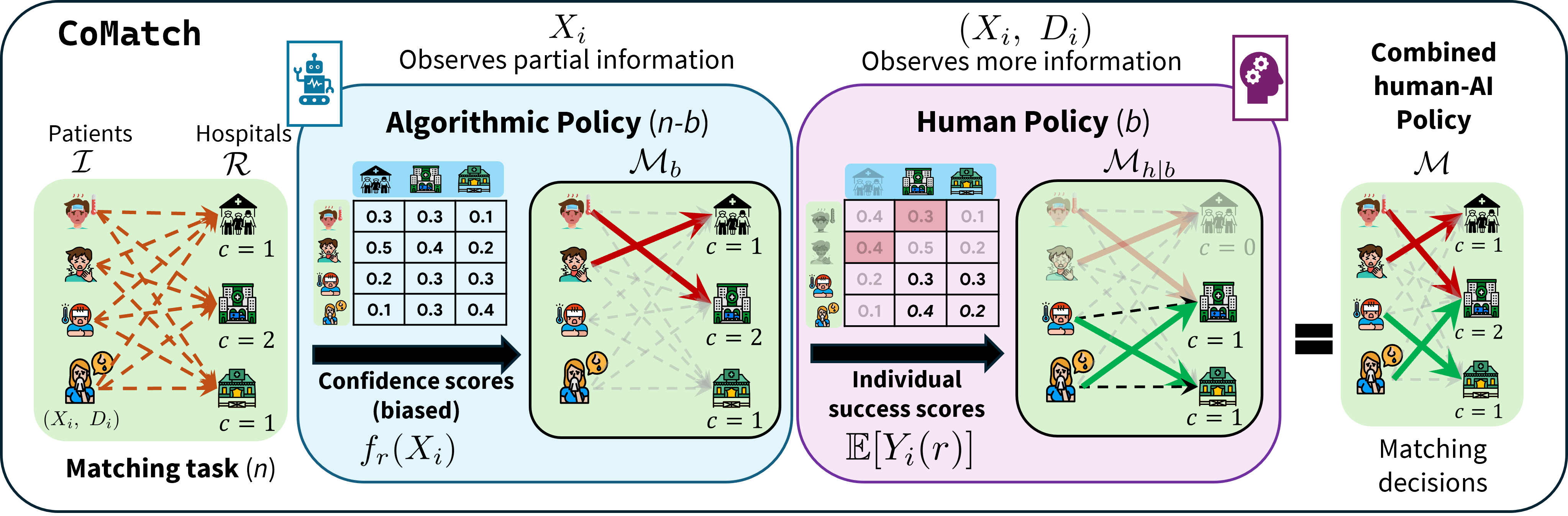

CoMatch — Human–AI Complementarity in Matching Tasks (HLDM workshop @ ECML-PKDD 2025)

- Challenge. Human–AI teams can either outperform or underperform their individual components. In resource allocation and matching tasks, excessive automation or over-reliance can lead to new harms instead of efficiency gains.

- Key question. How can we design AI systems that collaborate effectively with humans rather than replace them?

- What it does. Proposes CoMatch, a framework where the algorithm handles confident assignments and defers uncertain cases to humans. A bandit-based optimization (UCB1) dynamically tunes this division of labor, achieving higher joint utility and adapting to each user’s performance, thereby operationalizing human oversight and complementarity by design.
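The bandit-based tuning of the hand-off can be sketched with the standard UCB1 rule. In this hypothetical setup each arm is a confidence threshold: tasks whose algorithmic confidence reaches the threshold are decided by the algorithm, and the rest are deferred to a human. Treating arms as thresholds, the simulated environment, and the human accuracy of 0.7 are all assumptions made for illustration and do not describe the CoMatch implementation or its study.

```python
import math
import random

def ucb1_select(counts, sums, t):
    """Try every arm once, then pick the arm with the highest UCB1 index."""
    for arm, n in enumerate(counts):
        if n == 0:
            return arm
    return max(
        range(len(counts)),
        key=lambda a: sums[a] / counts[a] + math.sqrt(2 * math.log(t) / counts[a]),
    )

def simulate_task(threshold, rng, human_accuracy=0.7):
    """Hypothetical environment: reward is 1 when the chosen decider is correct."""
    confidence = rng.random()
    if confidence >= threshold:
        # The algorithm decides; it is correct with probability `confidence`.
        return 1.0 if rng.random() < confidence else 0.0
    # The task is deferred to the human.
    return 1.0 if rng.random() < human_accuracy else 0.0

def tune_threshold(thresholds, rounds, seed=0):
    """Run UCB1 over candidate thresholds; return pull counts and reward sums."""
    rng = random.Random(seed)
    counts = [0] * len(thresholds)
    sums = [0.0] * len(thresholds)
    for t in range(1, rounds + 1):
        arm = ucb1_select(counts, sums, t)
        reward = simulate_task(thresholds[arm], rng)
        counts[arm] += 1
        sums[arm] += reward
    return counts, sums

thresholds = [0.0, 0.35, 0.7, 1.0]  # 0.0 = fully automated, 1.0 = fully human
counts, sums = tune_threshold(thresholds, rounds=5000)
for threshold, n, s in zip(thresholds, counts, sums):
    print(threshold, n, round(s / n, 3))
```

The exploration bonus shrinks as an arm is pulled more often, so the procedure gradually concentrates on the thresholds with the best observed joint reward while still occasionally re-checking the others.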

The study involved 800 participants and 6,400 decision tasks in a time-bounded setting.

Participants completed algorithmically pre-matched resource allocation problems, deciding how to assign remaining elements within strict time constraints.

Results showed that hybrid teams following the adaptive hand-off strategy achieved higher fairness-adjusted utility than human-only or algorithm-only baselines.

This line of work connects to the Trustworthy AI requirements of technical robustness, transparency, and human agency and oversight.

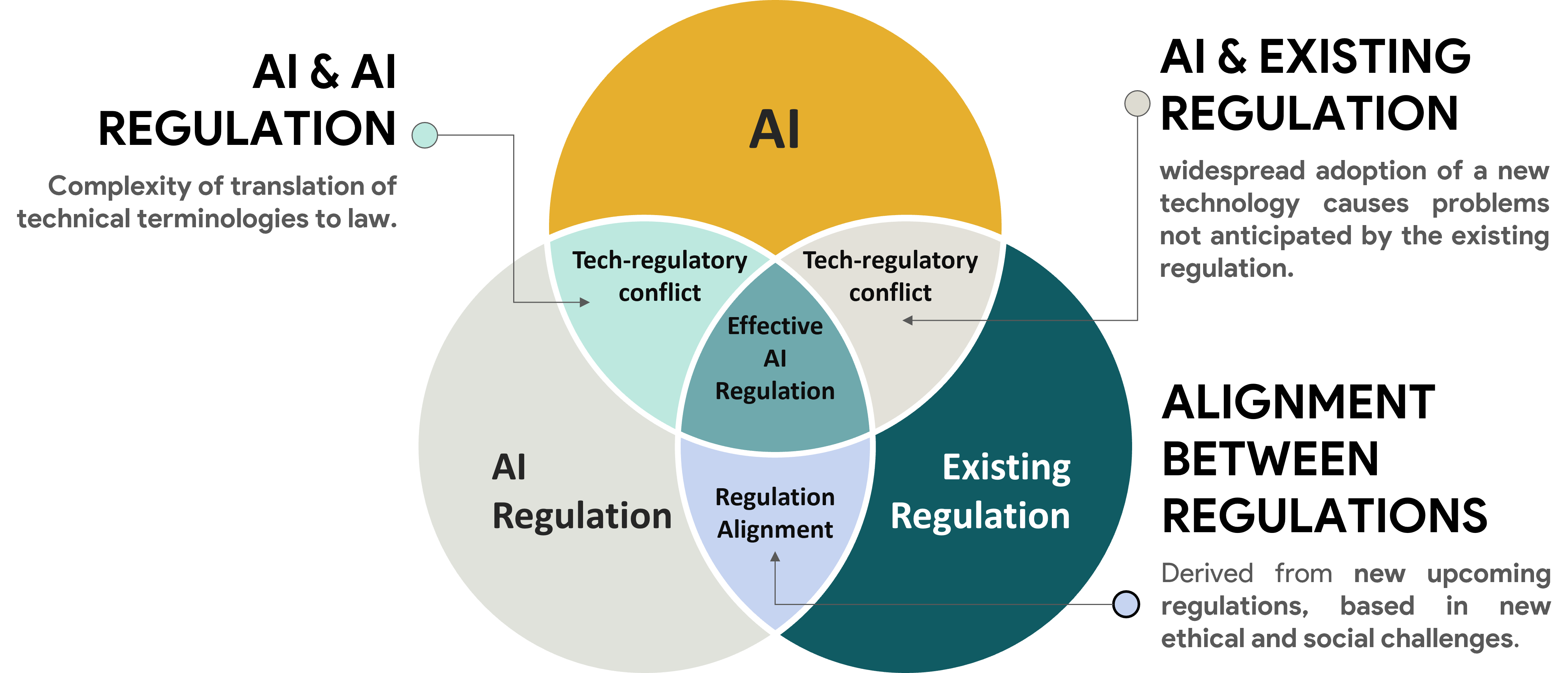

3. Governance Sphere: Regulation and Societal Alignment

The governance dimension of Trustworthy AI focuses on translating ethical and technical principles into legal practice.

While regulations like the AI Act or the Digital Services Act aim to make AI accountable, their effective application depends on how well technical design, human oversight, and legal interpretation are aligned.

However, these domains evolve at different speeds and with different vocabularies.

This creates techno-regulatory conflicts, where ethical aspirations, legal mandates, and technical realities diverge.

The governance sphere addresses these frictions and explores how to design AI systems that are compliant by design.

This line of work—carried out in collaboration with Julio Losada Carreño—applies the Trustworthy AI framework as a regulatory anchor in the labor domain.

It shows how ethical requirements translate into sectoral law, for instance:

- Human agency and oversight becomes the right to human review in automated decisions.

- Technical robustness aligns with product safety and occupational risk prevention.

- Transparency links to explainability obligations under the AI Act.

Effective AI Regulation — Trustworthy AI and Labor Law Alignment (RGDTSS & RLDE)

- Challenge. Legal frameworks and AI systems evolve at different speeds, creating conceptual and normative gaps that complicate accountability. In AI-based worker management (AIWM), this tension is magnified by structural power asymmetries between employers and workers, where algorithmic opacity can obscure responsibility.

- Key question. How can AI systems in employment contexts comply simultaneously with Trustworthy AI principles, the AI Act, and national labor law?

- What it does. This line of research makes two complementary contributions:

  - (1) Mapping intersections and synergies between the Trustworthy AI framework, the AI Act, and Spanish labor law, identifying how ethical requirements such as fairness, transparency, and human oversight can be operationalized through existing legal provisions.

  - (2) Addressing a terminological and epistemic clash between law and technology: the correlation–causation dilemma, where machine learning operates on statistical associations while legal systems demand causal reasoning and evidence of intent (the principle of sufficient reason).

Together, these works develop a tripartite taxonomy and a set of technical–legal guidelines (covering fairness, explainability, semi-automation, oversight, and AI literacy) towards effective and trustworthy AI regulation.

The first contribution establishes Trustworthy AI as a regulatory anchor, connecting three complementary pillars:

- TAI principles, which express ethical and human-rights-based values;

- the AI Act, which codifies those principles into risk-based obligations; and

- sectoral law, such as Spanish labor law, which governs specific domains like employment relationships.

We found both synergies and misalignments across these layers.

By aligning these layers, the research identifies concrete synergies: for example, human agency and oversight in TAI corresponds to the worker’s right to human review; technical robustness relates to occupational safety regulations; and transparency resonates with the duty to inform workers about algorithmic decision systems. This analysis demonstrates how abstract principles can be translated into enforceable rights and duties.

The second contribution addresses the epistemic misalignment between statistical inference and legal causation.

In legal reasoning, decisions must rest on causes that can be justified and audited.

AI systems, however, rely on patterns and correlations that lack intrinsic causal meaning.

This “correlation–causation dilemma” threatens to undermine legal principles of justification, equality, and accountability.

The study offers conceptual and methodological guidelines for bridging this gap—such as documenting data provenance, integrating causal reasoning in model design, and improving the explainability of AI-based decisions.

Together, these contributions form a coherent framework for effective AI regulation, showing how technical design, ethical guidance, and legal accountability can be harmonized to ensure that AI systems not only perform well but also act in accordance with democratic values and fundamental rights.

Closing Thoughts

Trustworthy AI is not a property of algorithms alone — it is a sociotechnical system shaped by data, humans, and institutions.

Achieving it requires:

- Measurable practices (metrics, audits, explainability),

- Legal alignment (sector-specific adaptation, accountability), and

- Shared responsibility among engineers, policymakers, and society.

Trust emerges when technical excellence meets ethical and legal coherence.
